After recently noticing some odd PHP being added to my site, I’ve acknowledged that I need to run routine file integrity checks. Aside from obviously suspicious lines of PHP prepended or appended to legitimate PHP files, there were also entirely new PHP files that had been created. Supposedly, this happened before I got BPS Pro from AITpro installed and running. However, the awesome Bluehost systems also failed to detect the malware.

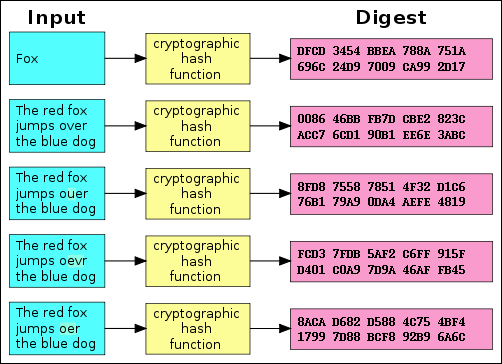

Unfortunately, Bluehost doesn’t make ClamAV available for its customers to run as a recurring scan, and I’m pretty sure “installing” software of my own in my jail would be very much discouraged. So how does one detect when files in a directory are changed or added? GNU/Linux ships with several digest utilities, such as `md5sum`, `sha1sum`, and `sha256sum`, that derive a hash, or checksum, from a file’s data. The best known are MD5 and the SHA family. SHA-2 variants such as SHA-256 have largely succeeded MD5, which is no longer considered collision-resistant. Naturally, if we record the checksum of every file, we can tell when a file has been modified, because any change to its contents changes its checksum. A file that isn’t in that record is either new or was deliberately excluded.
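Here is a minimal sketch of the baseline idea. It is written in Python rather than Bash and uses only the standard library’s `hashlib` and `os.walk`, so it is not the script from my repo. The function names (`sha256_of`, `snapshot`, `compare`), the choice of SHA-256, and the sample file `wp-xyz.php` are all illustrative assumptions. It records a checksum for every file under a directory. On a later scan, it sorts each path into one of three groups: modified, new, or missing.

```python
import hashlib
import os
import tempfile


def sha256_of(path, chunk_size=65536):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root):
    """Map every file path under root to its SHA-256 checksum."""
    result = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            result[path] = sha256_of(path)
    return result


def compare(baseline, current):
    """Classify paths as modified, new (absent from baseline), or missing."""
    modified = sorted(p for p in baseline.keys() & current.keys()
                      if baseline[p] != current[p])
    new = sorted(current.keys() - baseline.keys())
    missing = sorted(baseline.keys() - current.keys())
    return modified, new, missing


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        index = os.path.join(root, "index.php")
        with open(index, "w") as fh:
            fh.write("<?php // silence is golden\n")
        baseline = snapshot(root)

        # Simulate an appended line and a dropped-in file (hypothetical name).
        with open(index, "a") as fh:
            fh.write("<?php /* injected */ ?>\n")
        with open(os.path.join(root, "wp-xyz.php"), "w") as fh:
            fh.write("<?php /* new file */ ?>\n")

        modified, new, missing = compare(baseline, snapshot(root))
        print("modified:", [os.path.basename(p) for p in modified])
        print("new:", [os.path.basename(p) for p in new])
        print("missing:", missing)
```

In a real cron job, the baseline would need to be saved between runs instead of kept in memory.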

I’ve decided to create a Bash shell script that I can add to a cron job, so it runs routinely and lets me know when something funky happens. As a way of getting familiar with SQLite, I’ve decided to have the script use a SQLite database to record the information, i.e., the file path, its checksum, and the dates of events. However, partway through writing the script I noticed that more than one file can have the same checksum. It was then that I realized this is perfectly acceptable. If you copy a file and rename the copy, both files still have the same checksum. In my case, copies of an index.php designed to thwart directory listings were in place as a security measure.
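A quick way to see why duplicate checksums are harmless is to hash two identical copies of an index.php and ask SQLite which checksums appear more than once. This is a hedged Python sketch using the standard `sqlite3` module and an in-memory database. The paths, the file contents, and the single `files` table are hypothetical and are not my actual schema.

```python
import hashlib
import sqlite3

# Two identical "silence is golden" index.php copies plus one unrelated file.
content = b"<?php\n// Silence is golden.\n"
files = {
    "/site/wp-content/index.php": content,
    "/site/wp-content/plugins/index.php": content,
    "/site/wp-config.php": b"<?php define('DB_NAME', 'example');\n",
}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE files (path TEXT, checksum TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO files (path, checksum) VALUES (?, ?)",
    [(p, hashlib.sha256(data).hexdigest()) for p, data in files.items()],
)

# Checksums shared by more than one path: expected for copies, not tampering.
shared = conn.execute(
    "SELECT checksum, COUNT(*) FROM files GROUP BY checksum HAVING COUNT(*) > 1"
).fetchall()
for checksum, count in shared:
    print(checksum[:12], "shared by", count, "files")
```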

So, while it’s a work in progress, the script where I discovered this is in my GitHub repo. The problem occurred because the table documenting the file info used the checksum as its primary key. Primary key values must be unique, so a second file with an identical checksum can’t be inserted. I’ll have to adapt my script to use the full file path and name as the primary key instead. Then I can move on to adding new files and cross-checking checksums.
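The collision and the fix can both be reproduced in a few lines. This Python sketch again uses the standard `sqlite3` module with in-memory databases. It assumes a simplified table, and the column names and the `first_seen` timestamp default are illustrative, not taken from my script. With `checksum` as the primary key, the second identical `index.php` raises `sqlite3.IntegrityError`. Keying on the full path lets both rows in.

```python
import hashlib
import sqlite3

digest = hashlib.sha256(b"<?php\n// Silence is golden.\n").hexdigest()
rows = [
    ("/site/wp-content/index.php", digest),          # hypothetical paths
    ("/site/wp-content/plugins/index.php", digest),
]

# Original design: checksum as PRIMARY KEY, so the second copy collides.
old_db = sqlite3.connect(":memory:")
old_db.execute("CREATE TABLE files (checksum TEXT PRIMARY KEY, path TEXT)")
collided = False
try:
    old_db.executemany("INSERT INTO files (checksum, path) VALUES (?, ?)",
                       [(c, p) for p, c in rows])
except sqlite3.IntegrityError as exc:
    collided = True
    print("checksum as primary key fails:", exc)

# Revised design: the full path is the key, and checksums may repeat.
new_db = sqlite3.connect(":memory:")
new_db.execute("""
    CREATE TABLE files (
        path       TEXT PRIMARY KEY,
        checksum   TEXT NOT NULL,
        first_seen TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
    )
""")
new_db.executemany("INSERT INTO files (path, checksum) VALUES (?, ?)", rows)
print("rows stored:", new_db.execute("SELECT COUNT(*) FROM files").fetchone()[0])
```

Because the path is unique per file, a later scan can look up each path’s stored checksum to spot modifications. Any path with no row at all is a new file.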